(Previously titled: Peer Scan: Leveraging Augmented Reality Technology and Crowdsourced Feedback to Help Learners Navigate to Effective Materials for Self-Access Language Learning)

Curtis Edlin, Kanda University of International Studies (Chiba, Japan)

Euan Bonner, Kanda University of International Studies (Chiba, Japan)

Edlin, C., & Bonner, E. (2018). Augmented reality and crowdsourced feedback to help learners navigate self-access materials. Relay Journal, 1(2), 429-440. https://doi.org/10.37237/relay/010215

*This page reflects the original version of this document. Please see PDF for most recent and updated version.

Abstract

Learning is a life-long activity which neither begins nor ends with a learner’s time in formal educational environments. Many learners, however, have difficulty efficiently managing their own learning processes, including knowledgeably selecting effective learning materials, particularly in skill-focused areas such as language learning. Increasingly, self-access centers and other non-traditional learning environments act as a bridge between formal education and opportunities for life-long learning. This paper details a mobile app called Peer Scan, including what it is, the needs it was developed to address, decisions made in the development process, and how learners can use it in self-access learning environments to help them select appropriate learning materials. The app is designed to alleviate some of the difficulties students in self-access centers in Japan face in selecting effective materials. The ongoing design seeks to eliminate friction and barriers to use in order to support uptake, harnessing augmented reality object recognition technology to do so. Peer Scan is designed both to let learners see peer assessments of materials as an immediate means of support and to help them implicitly develop useful inner criteria for material selection that can aid them later as life-long learners.

Keywords: self-access centers, augmented reality, crowd-sourcing, materials

Peer Scan is a mobile app developed by Euan Bonner. It was initially devised as a learner support app in response to difficulties students face in selecting useful materials in the Self-Access Learning Center (SALC) at Kanda University of International Studies (KUIS) and potentially at other self-access centers (SACs) in Japan.

Learners naturally want to use good materials, and in self-access language learning (SALL) environments they often need to choose materials for themselves. Without a strong understanding of what constitutes good materials or how to identify them, some students may look to recommendations from people they see as authorities, such as learning advisors and language teachers. While learning advisors and teachers can and certainly do help students locate useful materials, relying on advisor or teacher support indefinitely does not lead to sustainable, self-efficacious, life-long learning processes.

Another approach learners can take when searching for helpful resources, absent a well-defined set of criteria to help them evaluate a resource, is to look at which materials were considered useful by peers who came before them. Peer Scan is made to support this process. By downloading the Peer Scan app onto their Android or iOS devices, learners can point their device cameras at the covers of materials to immediately access peer ratings and reviews without needing to press any buttons or enter any search criteria. Through several rating categories applied to each kind of material, learners can both leave their own simple ratings of a material and see averaged results from all other app users at their particular SAC. Over time, as more learners rate a given material, its ratings are more likely to reflect the average responses of the entire peer group. The rating categories can be customized according to the type of material and the needs of a particular SAC. Learners can use this information to help them judge whether a material is likely to be useful for them. Furthermore, if a center uses contextually effective criteria for its rating categories, learners can implicitly learn what to look for in effective materials, both by seeing others’ evaluations of materials and by using the rating categories as a guide for evaluating a material’s effectiveness themselves.
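Because the rating categories are customizable per center and per material type, a center's setup can be thought of as a small configuration table. The following is a minimal sketch under stated assumptions, not Peer Scan's actual data model: the names `SAC_CATEGORIES` and `validate_rating` are hypothetical, and the category labels are illustrative paraphrases of items from the Reinders and Lewis (2006) checklist in the Appendix. Each star score is assumed to be an integer from 1 to 5.

```python
# Hypothetical per-center configuration: which rating categories apply to
# which type of material. Labels paraphrase items from the Appendix checklist
# and are illustrative only.
SAC_CATEGORIES: dict[str, list[str]] = {
    "graded_reader": ["Clear language level", "Look nice", "Lots of examples"],
    "textbook": ["Clear instructions", "Lots of practice", "Gives feedback"],
}


def validate_rating(material_type: str, rating: dict[str, int]) -> dict[str, int]:
    """Check a learner's rating against the center's categories for this material type."""
    allowed = SAC_CATEGORIES.get(material_type)
    if allowed is None:
        raise KeyError(f"no categories configured for {material_type!r}")
    # Reject categories the center has not configured for this material type.
    unknown = set(rating) - set(allowed)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    # Each category is scored with one to five stars.
    for category, stars in rating.items():
        if not 1 <= stars <= 5:
            raise ValueError(f"{category}: stars must be 1-5, got {stars}")
    return rating
```

For example, `validate_rating("textbook", {"Lots of practice": 4})` is accepted, while a rating for a category the center has not configured is rejected. Keeping the categories in data rather than code is one way a single app could serve centers whose learners value different criteria.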

Needs

Peer Scan was conceptualized to help learners in SACs quickly assess materials as informed by their peers. In its prior location, the SALC at KUIS at times hosted over 10,000 physical materials. One way to help learners access good materials within a SAC is to carefully curate the selection of materials available, making it more likely that they will encounter useful materials as they search and less likely that they will come across materials poorly suited to SALL. While this can be useful for learners during their time within the SAC, it may be less effective in helping them develop as autonomous learners who can manage their own learning in the long run, once other, outside, and later contexts are considered. Ideally, learners would both have more immediate support in navigating to good materials and develop the ability to critically assess and choose materials for themselves in a multitude of contexts. Peer Scan was designed to help achieve these dual effects.

Good SALL materials

Ongoing considerations around good materials and connecting students to those materials arose from prior internal projects and research by learning advisors (LAs) in the KUIS SALC. Acknowledging that what constitutes a good material for SALL may differ from what is useful in a classroom context, and aiming to help curate physical materials collections, a group of LAs considered various checklists for both self-access and classroom contexts (Cunningsworth, 1995; Gardner & Miller, 1999; Harmer, 2001; Mukundan, 2011; Reinders & Lewis, 2006). The group eventually decided that the most contextually appropriate checklist for materials ordering and curation purposes in the SALC would be that of Reinders and Lewis (2006). For the purposes of Peer Scan, the most relevant criteria are those that matter to learners choosing from within an already curated collection and that can be evaluated on a graded scale, rather than in a simple yes/no way, after using a material. Rating materials against such criteria gives learners practice in judging varying levels of resource quality.

Localized considerations

In investigating what learners value in materials in the KUIS SALC, the LAs asked learners how they valued categories thought to be important to self-access language learning materials. The categories were identical to those Reinders and Lewis had used in a previous investigation in the English Language Self-Access Learning Centre (ELSAC) at the University of Auckland (Reinders & Lewis, 2006; see Appendix). They found that learners’ priorities were largely similar, with two exceptions. Compared with ELSAC learners, KUIS SALC learners considered guidance on how to use a material (tell me how to learn best) to be far less important in choosing a material, and they considered the physical condition or aesthetic of a material (how it looks) to be significantly more important. Most of the other categories of the good materials checklist were still considered important, with some variation. This points to possible learner variation across SACs and to the need for a customizable system. It also suggests that it could be worth helping learners come to value criteria and structures that support using materials effectively, or at the very least cautioning them against primarily judging a book by its cover.

Ability to evaluate criteria and select materials

Even when learners value a certain quality in materials, it is not always easy to tell by cursory inspection how a material rates against that criterion. In fact, another exploratory study on learner outlooks toward and use of resources in the SALC (Edlin, forthcoming) found little variation in how most qualities in materials were valued between KUIS SALC users who had taken SALC curricula and those who had not. However, those who had been exposed to SALC curricula rated the quality of materials as significantly better in nearly all materials sections. This could be a result of being able to identify and choose better materials, noticing richer semiotic budgets and their affordances within the materials, capitalizing on those affordances with more effective learning strategies, or some combination of these. Because SALC curricula are elective courses and not all learners take them, it would be beneficial to find other ways to help more learners locate and select effective materials and notice more of the affordances in materials, both in the short term and in the long run.

In the immediate sense, when learners are browsing the shelves, feedback from peers who have used a resource, given along categories considered indicative of effective SALL materials, would be useful. These ratings can help learners assess how well a material might fulfill a criterion they already value but find difficult to evaluate. Where learners overlook some useful criteria, assessing materials against those criteria could also help them in the long run. Learners may miss certain semiotic cues and thus simply not recognize useful affordances in a material. By displaying particular categories relating to experience with a material, and prompting learners to evaluate materials along them, the app may help learners better recognize a material’s affordances. This should support implicit learning and develop an understanding of targeted criteria for assessing and choosing effective materials, a skill that learners can take with them even after they graduate and are no longer present in a SAC, supporting them in further opportunities as life-long learners.

Design Process

The initial concept for Peer Scan was developed according to the research on good materials in self-access and our learners’ needs as described above. From there, the design and development process had to take numerous considerations into account in order to produce a tool that learners could widely and easily adopt, while minimizing barriers to use and keeping friction as low as possible.

Platforms

A device questionnaire conducted with students at KUIS found that all students possessed a smart phone, with the majority of the student body owning an Apple iPhone (about 75%) and the rest owning an Android device (close to 25%). In addition, the university required all students to purchase an Apple iPad, ensuring that every student had at least one device capable of supporting the Peer Scan app. Thus, the decision was made to support iOS and Android as the primary platforms for the app.

Technological choices

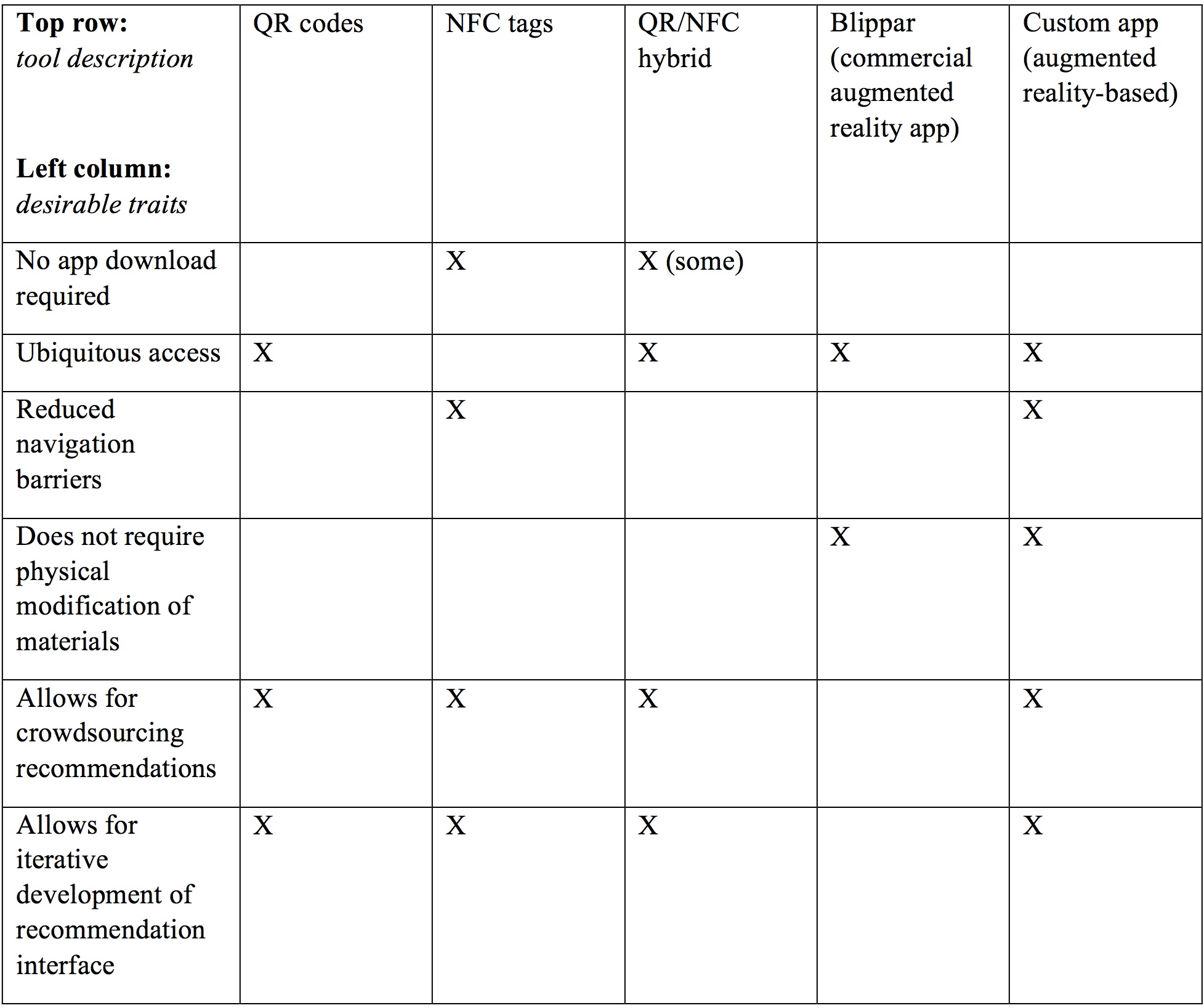

Before considering the interface for accessing and viewing material ratings and leaving responses, we evaluated a range of technologies. In the initial exploration of tools, we identified QR codes, NFC tags, and the commercial augmented reality app Blippar as ways to give users access to the review system. The pros and cons of these systems were considered (Table 1), and ultimately developing a new app was judged to be the most reasonable approach, with the potential to keep the friction experienced by end users to a minimum.

Table 1: Solutions Considered and their Respective Desirable Traits within a SALC Context

App and interface

Peer Scan was designed to work on both tablets and smart phones and has a dynamic user interface that scales text size to the platform used. This gives users an equally compelling experience regardless of their device. As a result, its use cases are not limited to universities that require students to own an Android or iOS tablet, as any smart phone will also suffice. The app can be deployed to any SAC, library, or learning environment where the use of smart devices is common.

The app’s user interface is focused on providing students with information related to specific materials as quickly and with as little friction as possible to encourage its use by the largest number of users. It was quickly determined that the more barriers to entry that were placed in front of students, the less likely the app would achieve the critical mass of users needed for it to fulfill its purpose of providing peer ratings to all learners.

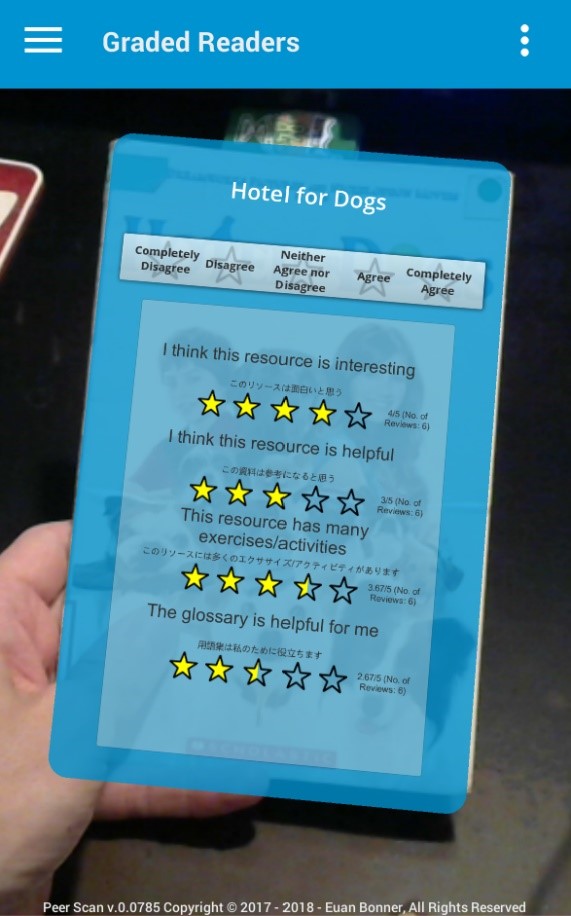

With this in mind, a user sign-up and login system was eschewed in favor of a simple option to choose a learning environment from a searchable list. This choice is saved, so users do not need to re-enter it every time they use the app. During regular use, users need only select which part of their learning environment they are currently in and then point their device cameras at the covers of materials to have the ratings information superimposed onto the material via augmented reality (Figure 1). The need to select a part of the learning environment arises from a technological limitation: no more than 1,000 materials can be recognizable at any one time. As smart devices continue to improve, entire SAC material catalogues are expected to eventually fit within device memory without the need to select a particular section or area.
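The section selection described above amounts to partitioning a center's catalogue so that each section stays within the 1,000-material recognition limit, and loading only the selected section's covers. The sketch below illustrates that idea under assumptions: the catalogue is modeled as a mapping from a hypothetical material ID to the section it is shelved in, and `group_by_section` and `targets_to_load` are illustrative names, not part of Peer Scan or of any AR library.

```python
from collections import defaultdict

MAX_RECOGNIZABLE = 1_000  # recognition limit stated in the text


def group_by_section(catalogue: dict[str, str]) -> dict[str, list[str]]:
    """Group material IDs by section and check each section fits the limit."""
    sections: dict[str, list[str]] = defaultdict(list)
    for material_id, section in catalogue.items():
        sections[section].append(material_id)
    for section, items in sections.items():
        if len(items) > MAX_RECOGNIZABLE:
            raise ValueError(
                f"section {section!r} has {len(items)} materials; "
                f"limit is {MAX_RECOGNIZABLE}"
            )
    return dict(sections)


def targets_to_load(sections: dict[str, list[str]], selected: str) -> list[str]:
    """Return only the covers the app would need to recognize for one section."""
    return sections[selected]
```

Under this sketch, the pilot's 800 graded readers would fit in a single section, whereas a collection of over 10,000 materials would have to be split across at least eleven sections.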

Figure 1: Early App Development Screenshot Showing Material Rating Information Superimposed onto the Cover of a Graded Reader

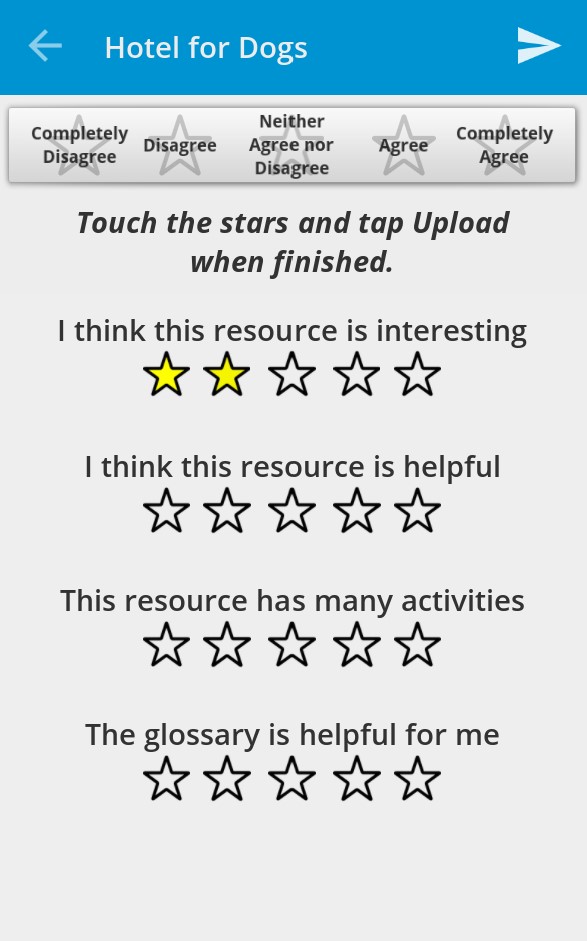

It is expected that most users of the app will browse through many materials before settling on one that they wish to learn more about. Peer Scan was designed using augmented reality both to instantly recognize materials without the need to enter titles or codes and to let users see the ratings information directly on the materials’ covers, removing the need to press any buttons to display or dismiss information. By simply placing a material in front of the device camera, users see its title, rating categories, and average star rating for each category. Each average is calculated from all the scores the material has received in that category and is presented as a score between 1 and 5 in the form of colored stars. If users wish to see more information or leave their own ratings, they simply touch the screen; they can then rate the material by touching one of the five stars in each category (Figure 2).
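The per-category averaging and star display described above can be sketched in a few lines. This is an illustrative assumption rather than Peer Scan's code: ratings are modeled as dictionaries mapping a category name to a 1-5 score, `category_averages` and `as_stars` are hypothetical names, and rounding the average to the nearest whole star is an assumed display rule, since the text does not specify how fractional averages are shown.

```python
from statistics import mean


def category_averages(ratings: list[dict[str, int]]) -> dict[str, float]:
    """Average all learners' scores separately for each category (scores are 1-5)."""
    scores: dict[str, list[int]] = {}
    for rating in ratings:
        for category, stars in rating.items():
            scores.setdefault(category, []).append(stars)
    return {category: mean(values) for category, values in scores.items()}


def as_stars(average: float) -> str:
    """Render an average as five stars, rounding half up to a whole star (assumed rule)."""
    filled = int(average + 0.5)  # Python's round() would round 4.5 down to 4 (half to even)
    return "★" * filled + "☆" * (5 - filled)
```

For instance, two learners scoring "Lots of practice" as 4 and 5 give an average of 4.5, which this rule displays as five filled stars. Because a learner may rate only some categories, each category is averaged over the ratings that actually include it.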

Figure 2: Leaving a Review for Other Learners to See by Touching the Stars

When fetching or uploading ratings, the app accesses a secure online server, providing it with the name of the material and the learning environment. The server responds with all the information it has on that material and, when a new review is uploaded, adds it to the database so that other users can access it immediately.
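The fetch/upload exchange described above keys every rating by learning environment and material name, so ratings from one center do not mix with another's. The sketch below models only that storage logic, in memory and without networking or security; `RatingStore`, `fetch`, and `upload` are hypothetical names chosen for illustration and do not describe Peer Scan's actual server or database.

```python
from dataclasses import dataclass, field


@dataclass
class RatingStore:
    """Hypothetical in-memory stand-in for the server's ratings database."""

    _ratings: dict[tuple[str, str], list[dict[str, int]]] = field(default_factory=dict)

    def fetch(self, environment: str, material: str) -> list[dict[str, int]]:
        """Return every rating stored for this material in this learning environment."""
        return list(self._ratings.get((environment, material), []))

    def upload(self, environment: str, material: str, rating: dict[str, int]) -> None:
        """Store a new rating; the very next fetch will include it."""
        self._ratings.setdefault((environment, material), []).append(dict(rating))
```

Keying on the (environment, material) pair is what keeps each center's averages local; pooling anonymous ratings across cooperating institutions, as suggested in the conclusions, would amount to querying several environments for the same material.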

Implementation and Use

The Peer Scan app was piloted in 2017 with two classes of freshman reading & writing students engaged in extensive reading projects. As part of their regular classroom activities, the students were required to read a graded reader every two weeks. As part of a debugging and testing phase, students were asked to leave star ratings for the materials they read and to report any issues they had to their teacher. Since the app relies on recognizing the covers of all materials present in a learning environment, for this phase all 800 graded readers available in the KUIS SALC had to be scanned, digitized, and added to the app database. This included recording material titles, formatting specific information such as publisher and edition, and handling duplicate materials with dissimilar covers.

The debugging and testing phase uncovered numerous bugs and issues with the app that were addressed, and much valuable information was gathered from student feedback that is being incorporated into future iterations as Peer Scan moves closer to a full release.

Conclusions and Future Directions

Peer Scan offers a novel way to help learners develop a better sense of a material’s quality with the help of their peers. It is hoped that it will also help learners recognize what may make a material useful to them and develop robust inner criteria against which to evaluate materials. While setting up a center to use Peer Scan may require some up-front work, the experience for end users is being continuously developed to be as barrier-free and frictionless as possible. As various centers and programs adopt the system, there may be opportunities to customize it further, for example by including rating categories suited to new classifications of materials or to new and unique groups of students. Further research by academics, teachers, and staff aligned with different centers could help further inform institutions of what characteristics end up proving useful for their specific learners, so that these can subsequently be included. Cooperating institutions may even benefit from sharing anonymous responses and ratings for materials, creating a more robust set of information for their learners to use with their collections of materials.

Acknowledgements

The authors would like to acknowledge and thank current and former learning advisors Atsumi Yamaguchi, Erin Okamoto, Neil Curry, and Akiyuki Sakai for their prior work to inform material curation policies, which included data and insights that began discussions and helped highlight some of the many possible difficulties learners may experience in selecting SALL materials.

Notes on the Contributors

Curtis Edlin is a learning advisor and co-resource coordinator in the Self-Access Learning Center at Kanda University of International Studies. He holds an MATESOL from SIT Graduate Institute and has been working in language education for over a decade. His research interests include learning environments, learner development, and learning analytics.

Euan Bonner is a senior English Language Institute lecturer at Kanda University of International Studies. He holds a Master of Applied Linguistics with a specialization in TESOL from the University of New England, Australia and has been working in language education for more than 10 years. His research interests include learner engagement and CALL with a focus on mobile app design utilizing augmented and virtual realities.

References

Cunningsworth, A. (1995). Choosing your coursebook. Oxford, UK: Heinemann.

Edlin, C. (2018, June). Our self-access learning centers as places, and their place within a campus environment. Poster presented at Psychology in Language Learning 3, Tokyo, Japan.

Gardner, D., & Miller, L. (1999). Establishing self-access: From theory to practice. Cambridge, UK: Cambridge University Press.

Harmer, J. (2001). The practice of English language teaching. Harlow, UK: Longman.

Mukundan, J. (2011). Developing an English language textbook evaluation checklist: A focus group study abstract. International Journal of Humanities and Social Science, 4(6), 21-28.

Reinders, H., & Lewis, M. (2006). The development of an evaluative checklist for self-access materials. ELT Journal, 60(3), 272-278.

Appendix

Categories for Student Questions for Good Materials in SALL (Reinders & Lewis, 2006)

Good materials:

1 Have clear instructions

2 Clearly describe the language level

3 Look nice

4 Give a lot of practice

5 Give feedback (show answers or let me know how I am doing)

6 Make it easy to find what I want

7 Contain a lot of examples

8 Tell me how to learn best

Dear Curtis and Euan,

The paper starts off with a good introduction and a clear rationale. I have replied separately with some comments on the text. You haven’t actually told us anything about the types of evaluation/comments learners are asked to provide. I think this would be really useful. Also, do teachers talk about the app/give students practice with it in class? Overall, a great project and one that every SAC should be using!!

Dear Hayo,

Thank you for the comments and feedback. We have made a revision of the article to address a number of things you kindly pointed out, and the revised article should be reflected here shortly.

The types of evaluation are more explicitly stated in the revision, though they are still minimal because the app is currently being piloted only with graded readers. We expect more evaluative fields to be opened in the future as the system is applied to other types of resources. Comments and reviews are being discussed, but they may create additional work in that they may need to be monitored for content.

Hi Curtis and Euan,

Thank you for writing up such an interesting article on the use of AR technology to help language learners select useful materials for their self-access language learning. Your article is an inspiration for me to think about how I can incorporate free AR technologies into my university’s self-access language center. Since our self-access language centre not only hosts a collection of self-access language learning materials, but also routinely organizes language consultations, workshops, and activities held by a group of International Tutors who are recent graduates from universities around the world, it would be fascinating to have an app developed to help our students see the popularity/usefulness of different resources, activities, and maybe even tutors!!

I have two questions related to the app. In Figure 1 and its related paragraphs, students are able to view the rating information by scanning learning materials with the application. I wonder if students can get access to more qualitative information about the materials, because some students may want to read about the experience of their fellow schoolmates, not only how useful the resources are but how they used the resources to aid their language learning. Also, is it possible for lecturers or language advisors to leave comments on the app? I think it would be helpful to students if they could have access to teachers’ recommendations as well.

I see a lot of potential in using this AR app and extending its use in a self-access language center. You mentioned near the end of your article that ‘further research by academics, teachers, and staff aligned with different centers could help further inform institutions of what characteristics end up proving useful for their specific learners’; I am very interested in collaborating with you to have a dry-run of a version of this app in my university. What do you think? I can be reached at iswchong@eduhk.hk. I look forward to hearing from you!

Cheers,

Sin Wang Chong

Hi Sin Wang Chong,

Thank you for the kind comments and for your questions. The current iteration does not include many qualitative features, though they may be a possibility in the future. One consideration regarding written comments is the need for curation. University students are not always as mature as we might hope, especially when anonymous (or perhaps this is a rule for people in general). Opening comments may make it necessary to curate comments/recommendations. Secondly, viewing comments at a reasonable size on a smart device would require some new interface designs to allow for menus, scrolling, etc. This could certainly be possible, but it starts to move away a bit from the initial barrier-free perspective and would require more development time and testing.

All in all, I would look at each version as an iteration moving forward. The considerations above do not preclude features from being developed necessarily, but they do need to be thought over. For example, who will curate comments for an institution? If we include reviews or recommendations, how do they display and how do we keep initial barriers to a minimum? How much time does it take to develop that?

I am very happy to hear all your enthusiasm. I will make sure that Euan sees your comment so he can get in touch when he has time. He is the real technical and creative power behind the project. I am sure he can inform you better of the technological capabilities and limitations (given time and resources) than I can.

Thank you again for your comments and questions.

Kind regards,

Curtis Edlin